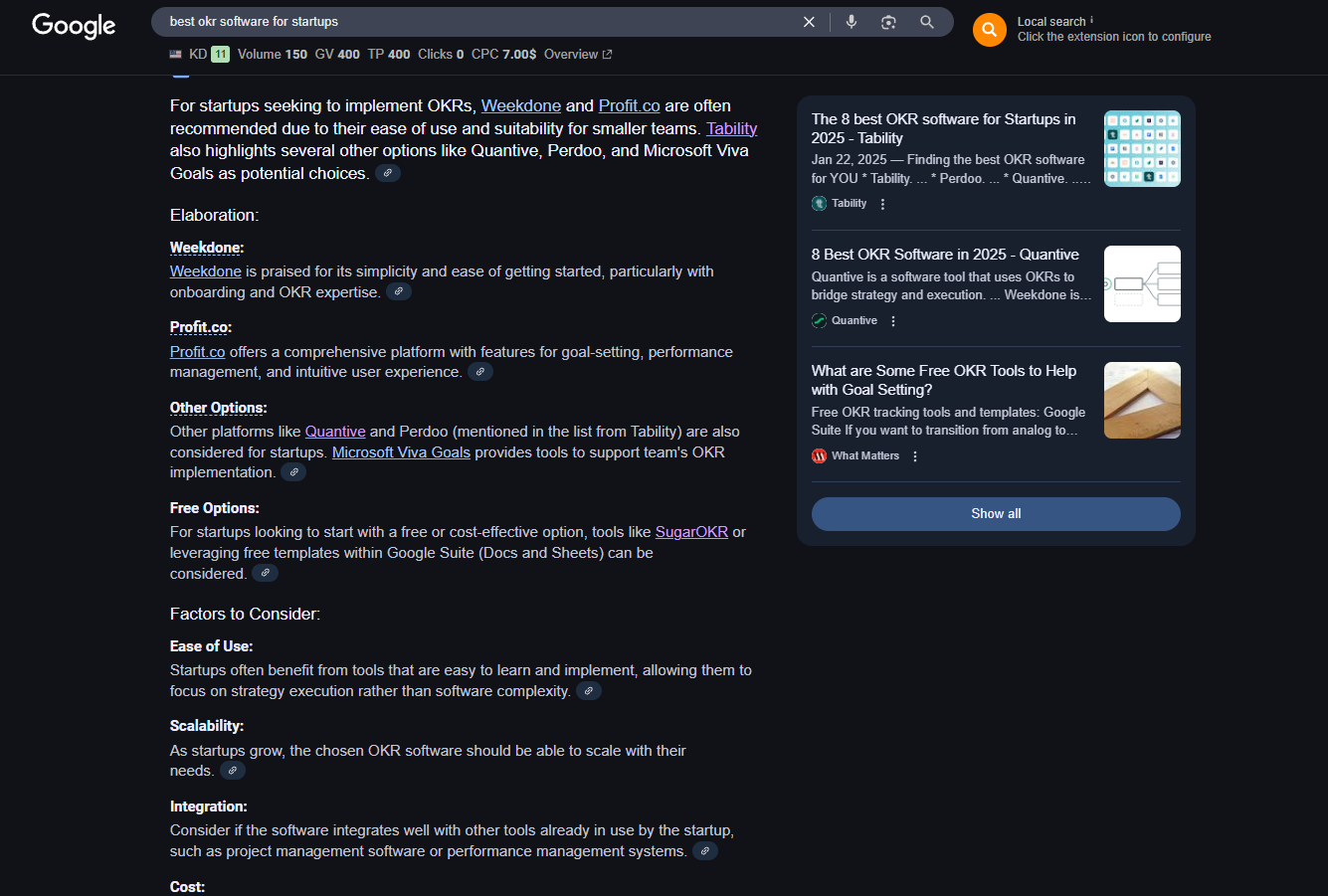

TL;DR

This article takes a deep dive into the difference between SEO and GEO. You'll come away knowing the overlap between LLMs and Google Search, how to optimize for GEO, and how SEO might change in the future due to LLMs.

“How do I show up in AI Overviews?”

It's a question that seems to come up in every client call these days.

Everyone wants to know the difference between SEO and GEO.

Whether they should go all-in on GEO, split their efforts, or just let AI overviews come naturally.

And rightfully so: the narrative around SEO can feel stressful, especially if it’s a channel you rely on heavily for revenue growth.

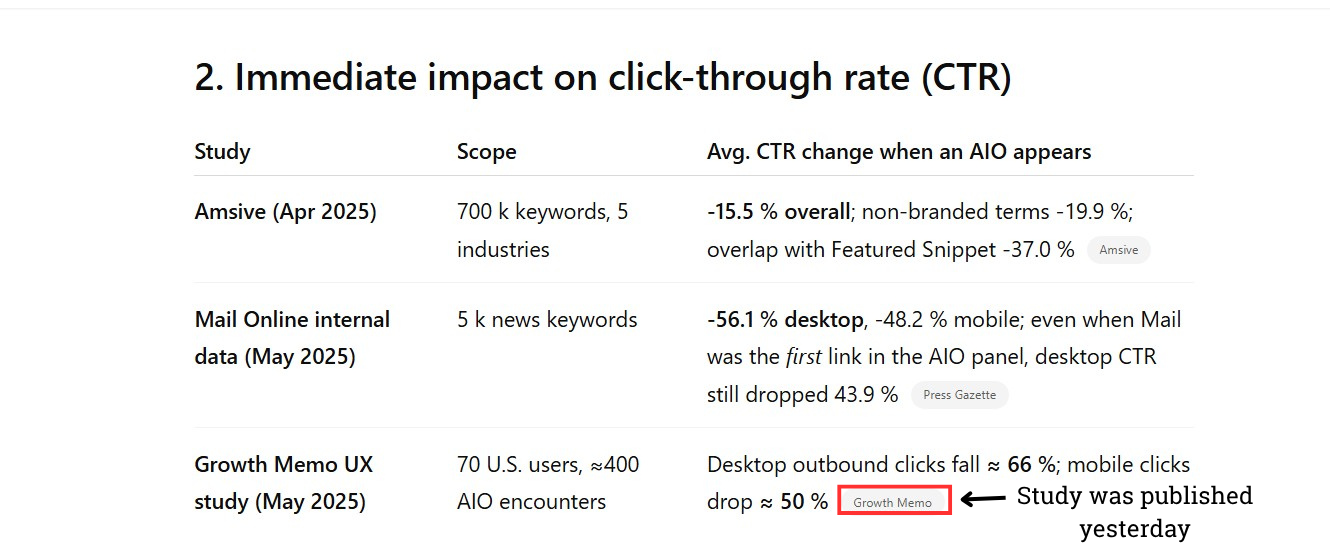

With AI overviews reducing clicks by 35%, Google shifting towards a full AI-generated SERP, and LLM usage growing exponentially by the day, it’s hard to keep up with everything that’s changing.

But does all this noise mean SEO is dying, like some on LinkedIn would have you believe?

Not really.

Consumers will always seek out human answers, whether it’s from a traditional search engine or a large language model.

But with the right strategy, you can optimize for all types of search.

And that starts with understanding what GEO is and how it works.

So, to clear up the confusion, I decided to put this article together.

No fluff. Just practical insights you can use to guide your strategy.

What Is Generative Engine Optimization?

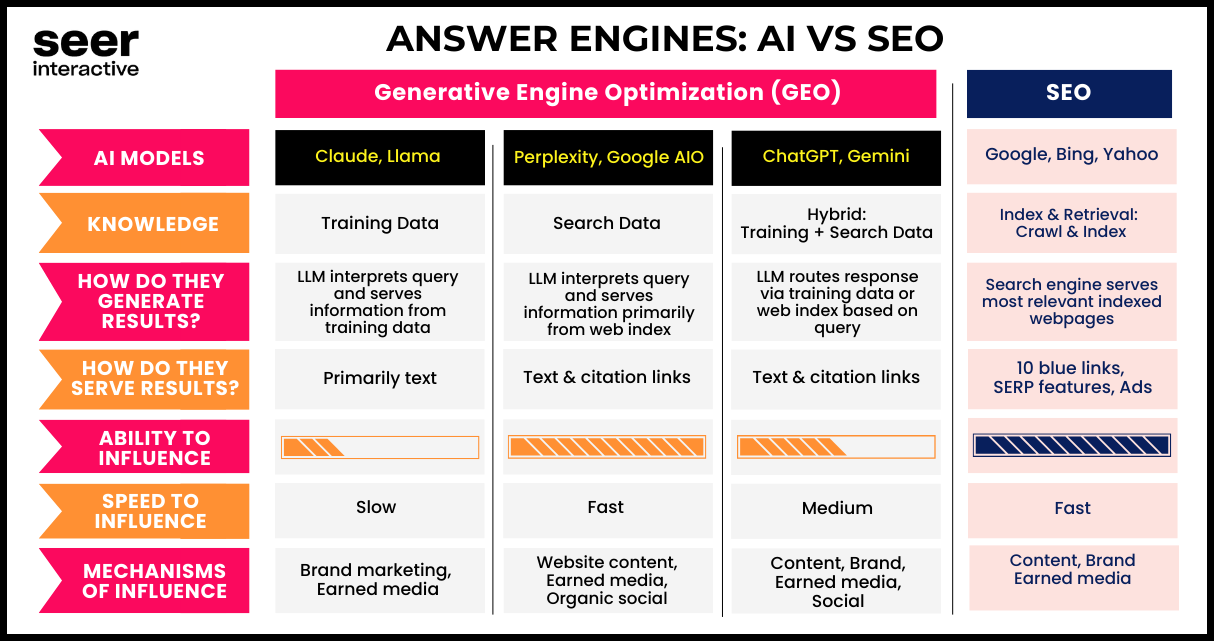

At its core, GEO focuses on optimizing your website, brand, and content for large language models (LLMs), such as ChatGPT, Claude, Perplexity, and now AI overviews.

Whether you call it Answer Engine Optimization, Large Language Model Optimization, or just AI search, the goal is the same.

Get featured more in LLMs and be cited as either a source or answer in the outputs.

Think of it as SEO, but for AI instead of Google.

The main difference, however, is that users don’t have to click site-to-site to find what they’re looking for.

An LLM can now provide summaries and citations based on the data it has access to.

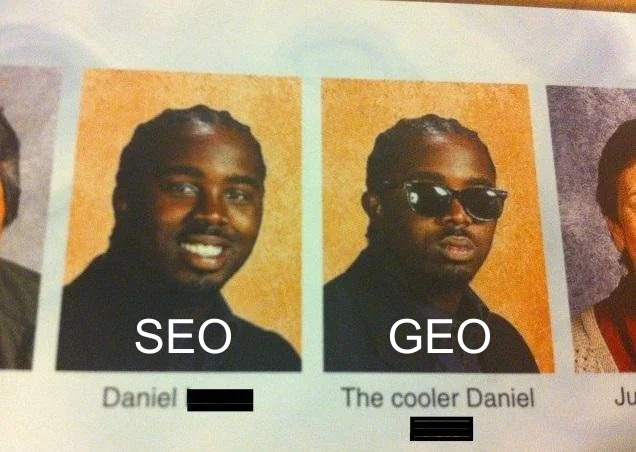

GEO vs SEO: A Familiar, But Different Game

So, is GEO a different playing field than SEO?

If you’re still doing outdated SEO based on keyword stuffing, then yes.

But Google has evolved as a platform over the last 15 years, moving away from traditional keyword matching to a more entity- and intent-driven platform.

To really answer this question, we have to look at how both engines are built.

How Does Google Search Work?

Google search basically works in three stages:

- Crawling: Google uses automated bots to crawl the web and download text, images, and videos from the websites and pages it finds. These bots crawl constantly, processing new information to find fresh, relevant content to show searchers.

- Indexing: Once Google has crawled these pages, it stores the information in its index, which is a massive database containing hundreds of billions of pages.

- Ranking Results: When a user then performs a search, Google returns the information/pages that are most relevant to the user’s query.
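To make the three stages concrete, here is a toy sketch in Python. It is not how Google is implemented: the pages are a hypothetical hard-coded dictionary standing in for crawled content, the index is a simple inverted index (word → pages), and ranking just counts matching query words, which is the pure keyword matching described next.

```python
from collections import defaultdict

# Hypothetical mini "web" (URL -> page text) standing in for pages a crawler downloaded.
PAGES = {
    "example.com/bulbs": "how to change a light bulb safely",
    "example.com/jaguar": "jaguar top speed in the wild",
    "example.com/cars": "the fastest jaguar car models",
}


def crawl(pages):
    """Crawling: 'download' every known page (here, just copy the dict)."""
    return dict(pages)


def build_index(docs):
    """Indexing: map each word to the set of URLs that contain it (an inverted index)."""
    index = defaultdict(set)
    for url, text in docs.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def rank(index, query):
    """Ranking: score each URL by how many query words it contains (pure keyword matching)."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, set()):
            scores[url] += 1
    # Highest score first; ties broken alphabetically so the output is stable.
    return sorted(scores, key=lambda url: (-scores[url], url))


index = build_index(crawl(PAGES))
print(rank(index, "jaguar top speed"))  # ['example.com/jaguar', 'example.com/cars']
```

Real ranking weighs far more signals than word counts; the point of the sketch is only the crawl → index → rank pipeline.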

But how does Google determine the relevance of a user’s query?

Traditionally, Google relied on keyword matching to find pages that contained wording similar to the user’s search query.

Over time, however, Google's algorithms have continually evolved to deliver better results by understanding key signals such as:

Meaning of Your Query

This key signal determines the intent behind your search.

In fact, Google has built internal language models that help them decipher what you’re looking for and what result would be most relevant.

Traditionally, Google would rely on exact-match keywords, matching your query to pages that contained that same keyword.

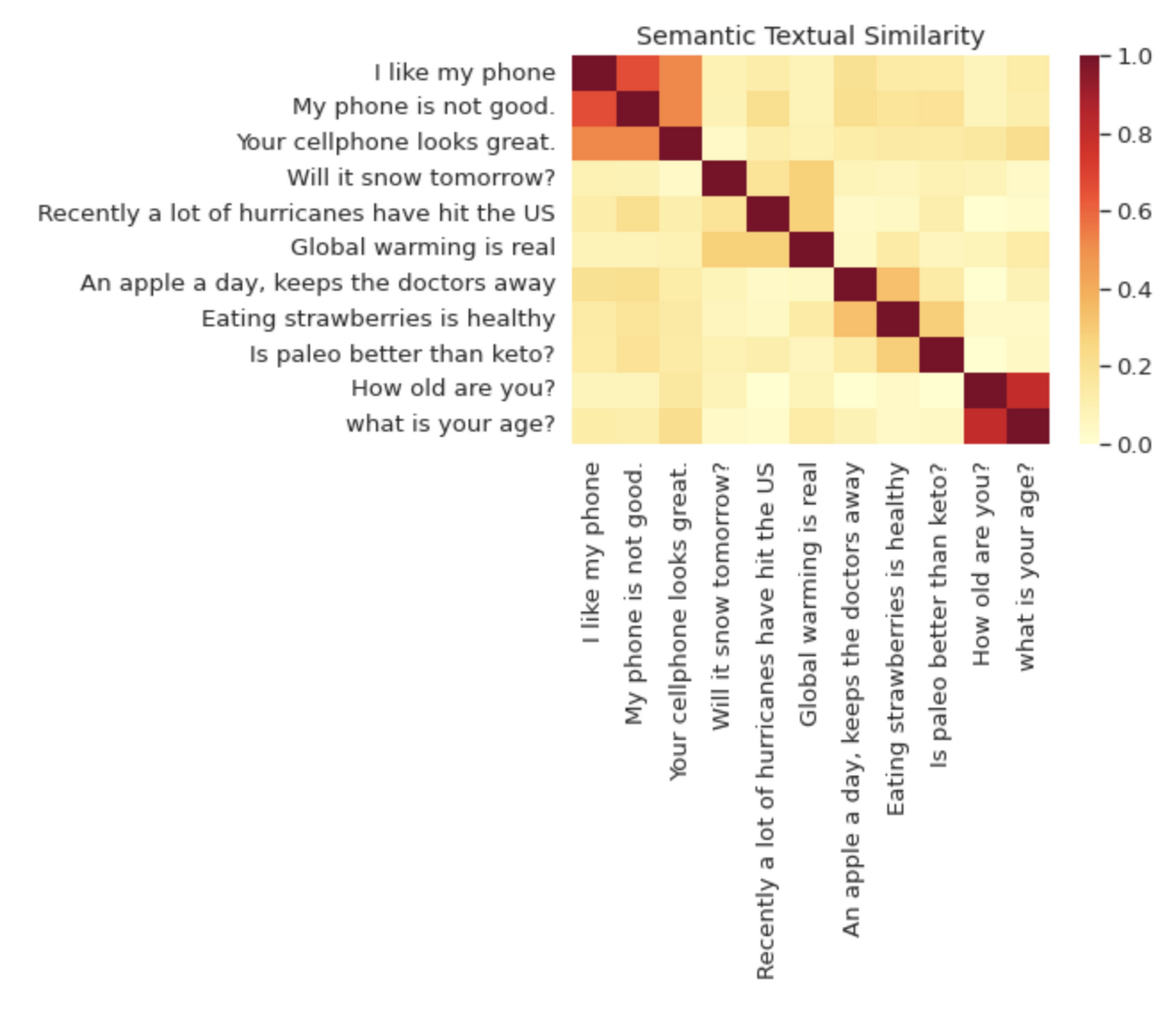

Google still uses this approach in the form of lexical search (matching exact word strings), but it has expanded its systems to include semantic search as well (representing words as numeric values so it can measure how they relate to each other).

So now, instead of relying only on the exact word you’re using, Google’s systems look at the overall intent and meaning behind your search.

It’s a way for them to connect words to each other and understand what your attempted search might’ve been.

That way, they can show you relevant results, even if you spelled something wrong or your search isn’t a direct match.

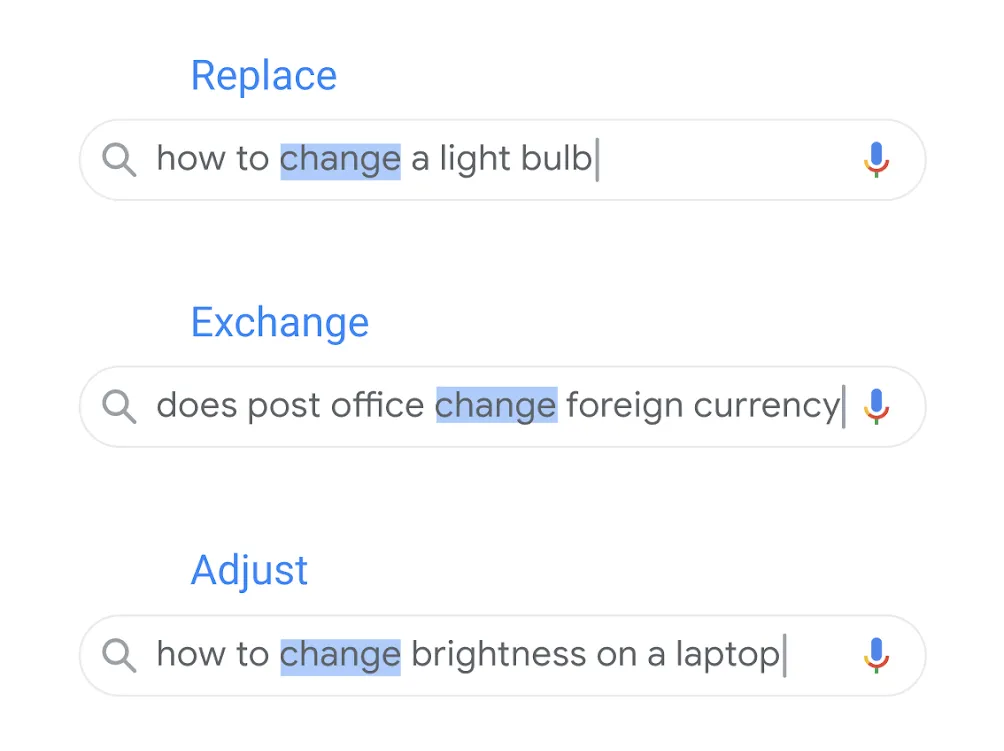

So searches like “How to change a light bulb” and “how to replace a light bulb,” which share the same intent, return nearly identical results.
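Here's a minimal sketch of the difference between lexical and semantic matching, assuming tiny hand-made word vectors (the `VECTORS` table is hypothetical; real systems learn vectors with hundreds of dimensions). Lexical overlap treats "change" and "replace" as different words, while averaging the word vectors and comparing them with cosine similarity shows that the two queries point in almost the same direction.

```python
import math

# Hypothetical 3-dimensional word vectors, hand-picked so that
# "change" and "replace" point in nearly the same direction.
VECTORS = {
    "how": [0.1, 0.1, 0.1],
    "to": [0.1, 0.1, 0.1],
    "a": [0.1, 0.1, 0.1],
    "change": [0.9, 0.1, 0.0],
    "replace": [0.85, 0.15, 0.0],
    "light": [0.0, 0.9, 0.1],
    "bulb": [0.0, 0.8, 0.2],
}


def lexical_overlap(q1, q2):
    """Jaccard overlap of exact word strings (lexical matching)."""
    a, b = set(q1.lower().split()), set(q2.lower().split())
    return len(a & b) / len(a | b)


def embed(query):
    """Average the vectors of known words into a single query vector."""
    vecs = [VECTORS[w] for w in query.lower().split() if w in VECTORS]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    if norm == 0:
        return 0.0  # no known words, nothing to compare
    return sum(x * y for x, y in zip(u, v)) / norm


def semantic_similarity(q1, q2):
    return cosine(embed(q1), embed(q2))


q1, q2 = "how to change a light bulb", "how to replace a light bulb"
print(round(lexical_overlap(q1, q2), 2))  # 0.71
print(semantic_similarity(q1, q2))
```

With these made-up vectors, the lexical score is 5/7 (one word out of seven differs), while the semantic score comes out above 0.99.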

On the other hand, a search like “What’s a Jaguar’s top speed” has two different intentions (either the car or the animal), so Google will serve mixed results to meet either intent.

And if we switch this to “what’s the fastest type of Jaguar,” then Google now assumes your intention is to look for jaguar cars, not the animal anymore.

Google is predicting your intent by analyzing the meaning of, and connections between, the words you use in your search, even when the change is a single word.

Relevance of Content

Once Google identifies the intent of your search, it uses a system that retrieves relevant information from its index.

This data can include:

- Direct keyword signals: Your terms (or close variants) in titles, headings, body copy, image alt text.

- Semantic signals: Related phrases and entities surfaced by neural-matching models, so “grain-free kibble” still connects to “dog food without grains.”

This is where Google checks which pages use words similar to your search (or close variations), then measures how well the page answers the overall topic.

So not only does Google use language processing to understand your initial search, but they use the same methodology to pair relevant articles to your intent.

They understand your intent and then find articles that meet that intent.

Our job as SEOs for a while now has been to act as a bridge for Google to connect relevant content to what the searcher is looking for.

Which boils down to:

- Prioritizing what your audience is actually searching for

- Putting together a resource that goes beyond the top ranking results (think why Google should prioritize your content over the others)

Relevancy is probably the most important part of creating content that ranks, but it’s a factor many overlook.

Quality of Content

Google doesn’t assign a defined E-E-A-T (experience, expertise, authoritativeness, trustworthiness) score to every query, but it does highlight E-E-A-T as a framework for creating helpful content:

While E-E-A-T itself isn't a specific ranking factor, using a mix of factors that can identify content with good E-E-A-T is useful. For example, our systems give even more weight to content that aligns with strong E-E-A-T for topics that could significantly impact the health, financial stability, or safety of people, or the welfare or well-being of society. We call these "Your Money or Your Life" topics, or YMYL for short.

This part of the system is mostly designed to produce results that are accurate and helpful to the user.

As Google mentions above, YMYL queries are the ones it absolutely has to serve the right results for.

Whether it’s a query that can impact health, financials, or well-being, the last thing Google wants to do is return results that can negatively impact the user.

Usability of Content

When Google is evaluating results, they also look at the usability of a page.

They want to serve the best resources to searchers, so it makes sense that they prioritize sites that load quickly and work well on mobile devices.

Pro tip: Google primarily crawls and indexes the mobile version of your site (mobile-first indexing), so mobile usability should absolutely be a priority.

Context and Settings

The context of Google Search can primarily boil down to personalization, where Google uses signals like location, search history, and current events to pull together results that would be most relevant for you.

So if you’re based in Chicago and searching “football” while a Bears game is going on, Google will likely serve a result of the game’s current score.

Now, How Do LLMs Work?

Now that we know how Google works and uses language processing in their systems, let’s talk about Large Language Models.

While I’m not an expert on machine learning, I have done a fair amount of research to cover this.

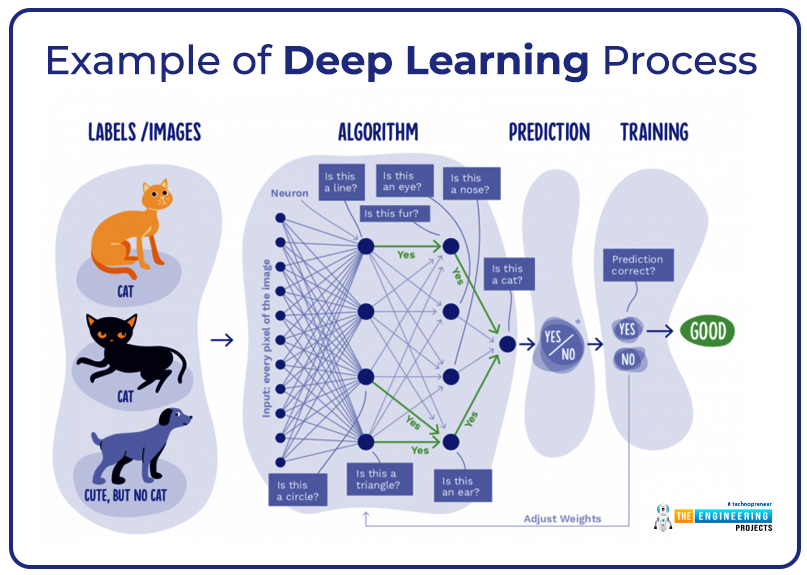

There’s no simple way to describe LLMs, but they’re basically large models that are built on the foundations of machine learning, which is the process of training a computer to recognize patterns and make predictions based on available data.

Take email spam filtering, for example.

Spam filters rely heavily on supervised machine learning: models trained on large labeled datasets of spam and non-spam emails.

By reading and analyzing each type of email for indicators like certain terms or phrases, filters can begin to identify and predict which emails should be marked as spam.
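A spam filter like this can be sketched with a Naive Bayes classifier, one classic supervised learning technique (not necessarily what any particular email provider uses). The four training emails are hypothetical; the model learns how often each word appears in spam versus non-spam ("ham") mail, then predicts whichever label makes a new email's words most likely.

```python
import math
from collections import Counter

# Hypothetical labeled training emails: (text, label).
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting notes for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]


def train(examples):
    """Count words per label and labels overall (multinomial Naive Bayes)."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label whose word statistics best explain the email."""
    word_counts, label_counts, vocab = model
    total_emails = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        score = math.log(label_counts[label] / total_emails)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing keeps unseen words from zeroing out the score.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING)
print(predict(model, "free prize money"))     # spam
print(predict(model, "monday team meeting"))  # ham
```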

While machine learning covers a wide variety of tasks and handles simple predictions well, deep learning enables machines to solve much more complex tasks.

This is thanks to neural networks: systems of interconnected nodes organized into many stacked layers. That depth is where the name deep learning comes from.

In a neural network, each layer of nodes processes the input it receives and passes the result on to the next layer, which does the same thing, until the final layer produces an output prediction.
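To show what passing an input through the network looks like, here is a tiny two-layer forward pass in plain Python. Every node in a layer takes a weighted sum of all outputs from the previous layer, adds a bias, and applies an activation function. The weights below are hypothetical hand-picked numbers; a real network learns millions or billions of them during training.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


def layer(inputs, weights, biases, activation):
    """One layer: each node takes a weighted sum of ALL inputs, adds its bias, applies an activation."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs):
    """Pass the input through a hidden layer, then an output layer."""
    # Hypothetical hand-picked weights; a trained network learns these values.
    hidden = layer(inputs, weights=[[0.5, -0.2], [0.3, 0.8]], biases=[0.0, 0.1], activation=relu)
    output = layer(hidden, weights=[[1.0, -1.0]], biases=[0.2], activation=sigmoid)
    return output[0]  # a score between 0 and 1, e.g. "how likely is this spam?"


print(round(forward([1.0, 2.0]), 3))  # 0.154
```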

These deep learning models are built to handle much more complex tasks than just marking emails as spam or not spam.

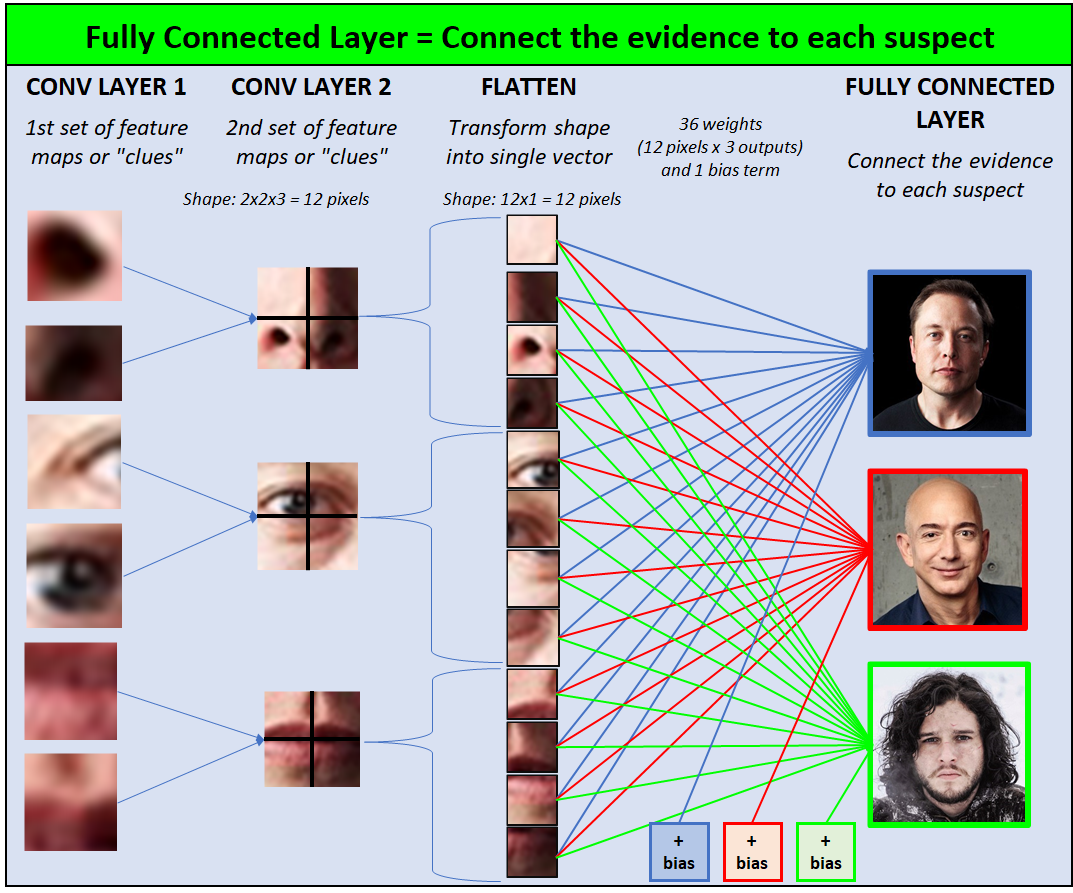

A common example of deep learning is facial recognition technology.

In this scenario, the neural network is trained on an extremely large dataset of faces to be able to extract information such as features, colors, and shapes, which can then be used to verify the identity of an input image.

Another type of neural network is the transformer, an architecture introduced by researchers at Google in the 2017 paper “Attention Is All You Need.”

While initially built for translation, a transformer is a type of deep learning model that uses an attention mechanism to understand the context and dependencies of each word in an input and can generate an output based on the task.

An example of this could be text summaries. The transformer will read an entire document, process the most important takeaways and then generate a short text with those main points.
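The attention mechanism at the core of the transformer fits in a few lines. This sketch implements scaled dot-product attention as defined in “Attention Is All You Need” (softmax(QKᵀ/√d)·V) over plain Python lists. In a real transformer, the query, key, and value vectors come from learned projections of each token's embedding; here they are passed in directly, and the numbers in the usage line are made up.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, computed row by row."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this token (query) relates to every token (key) in the input.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted blend of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 2.0]]))  # [[1.0, 1.0]]
```

Both keys match the query equally here, so the attention weights are 0.5 each and the output is an even blend of the two value vectors.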

Now with all that, we can finally talk about LLMs.

We’ve already covered the core concepts. What sets LLMs apart is scale: these models are trained on massive datasets of books, articles, and huge swaths of the web.

The sheer depth and variety of data provided enables LLMs to understand human language based on the billions of examples they’ve seen.

And this ties together nicely with OpenAI’s GPT models. GPT stands for generative pre-trained transformer: generative (the model generates text, using the decoder part of the transformer architecture), pre-trained (it has been trained up front on a large dataset), and transformer (the neural network architecture that powers the model).

Google Search vs LLMs

So even though that was my dumbed-down attempt to explain a complex topic like LLMs, how are Google’s search algorithm and LLMs similar?

Here are a few similarities we can highlight:

Same Transformer DNA

As mentioned in the previous LLM section, transformers are a key part of LLMs.

Again, it’s right in the name of GPT.

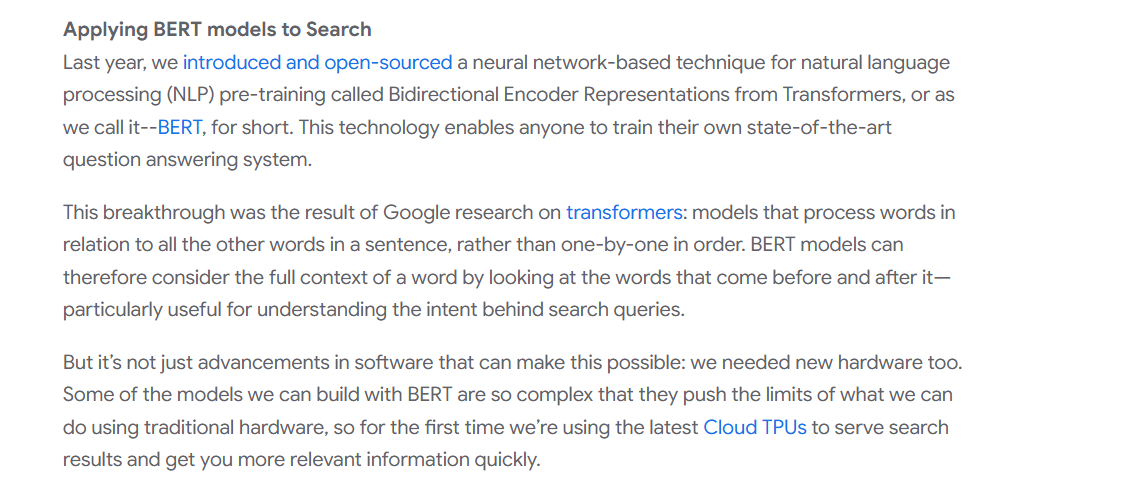

But what most people overlook is that Google was an early pioneer with transformers.

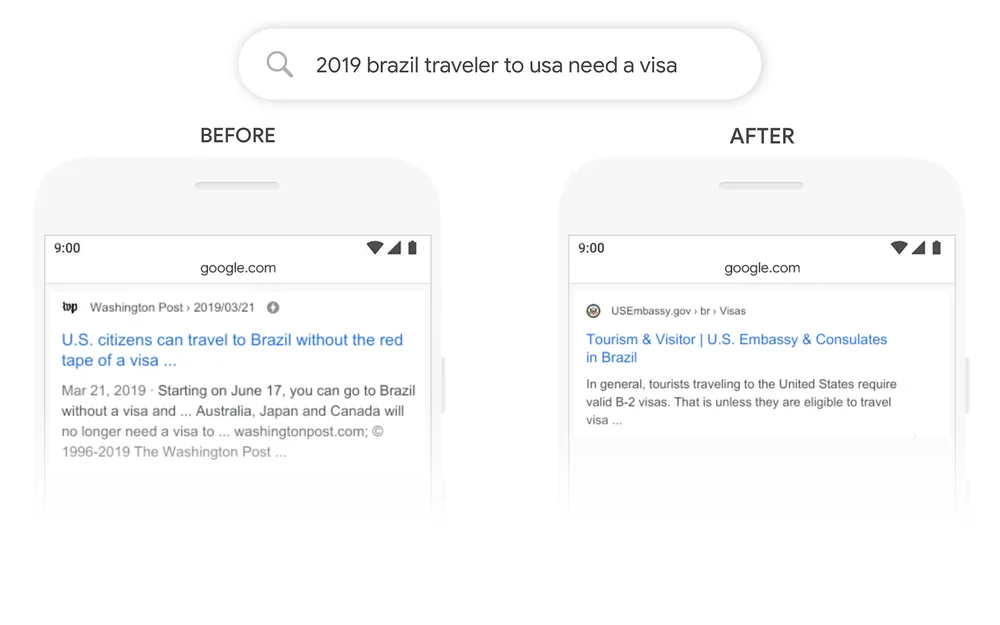

While GPT-1 came first in June 2018, Google released its own transformer-based model, BERT (Bidirectional Encoder Representations from Transformers), in October 2018.

When Google brought BERT into Search in 2019, it helped the search engine understand the full intent behind a query by interpreting each word in the context of the words before and after it.

But when talking about the similarities between LLMs and Google Search, a lot of it comes down to language understanding.

Both LLMs and Google Search use tokenization to split your words into chunks (tokens) and then embed each token as a dense vector of numbers, which lets both systems connect the dots between related words.

So instead of interpreting each word in isolation, the transformer uses its attention mechanism to compare those vector embeddings and calculate how strongly each word in your query relates to the others.

In other words: which words in your prompt or search signal the intent behind it?

It’s a core feature of LLMs, but also something integral to Google Search.
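The tokenize-then-embed step can be illustrated with a greedy longest-match-first subword tokenizer, the basic idea behind WordPiece-style tokenizers (BERT uses WordPiece; GPT models use a different scheme, byte-pair encoding). The vocabulary and embedding vectors below are hypothetical placeholders: real models learn tens of thousands of vocabulary pieces and the numbers in each vector.

```python
import random

# Hypothetical subword vocabulary; real tokenizers learn tens of thousands of pieces.
VOCAB = {"light", "bulb", "s", "re", "place", "ment", "how", "to"}


def tokenize(text):
    """Greedy longest-match-first subword tokenization."""
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, shrinking until it is in VOCAB.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in VOCAB:
                    tokens.append(piece)
                    start = end
                    break
            else:
                tokens.append("[UNK]")  # no vocabulary piece starts here; skip one character
                start += 1
    return tokens


# Hypothetical embedding table: one dense vector per token. Real models learn these
# numbers during training; here they are seeded random placeholders.
DIM = 4
_rng = random.Random(0)
EMBEDDINGS = {tok: [_rng.uniform(-1.0, 1.0) for _ in range(DIM)] for tok in sorted(VOCAB | {"[UNK]"})}


def embed(tokens):
    """Look up the vector for each token."""
    return [EMBEDDINGS[tok] for tok in tokens]


tokens = tokenize("replacement lightbulbs")
print(tokens)  # ['re', 'place', 'ment', 'light', 'bulb', 's']
vectors = embed(tokens)
```

Because "replacement" isn't in the vocabulary as a whole, it is split into known pieces, so the model can still relate it to other words built from "re" and "place".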

Retrieval and Generation

Although the two work differently, both systems are built on a large corpus of data.

Google maintains a massive index that it retrieves documents from, while LLMs are trained on massive datasets of text.

And a large portion of this text came from the web itself.

Google focuses primarily on retrieving results from its index, while LLMs use vast amounts of text from the open web to train a language model that generates outputs.

But now, with AI Overviews and RAG, retrieval and generation are starting to overlap.

AI Overviews, AI Mode, and RAG

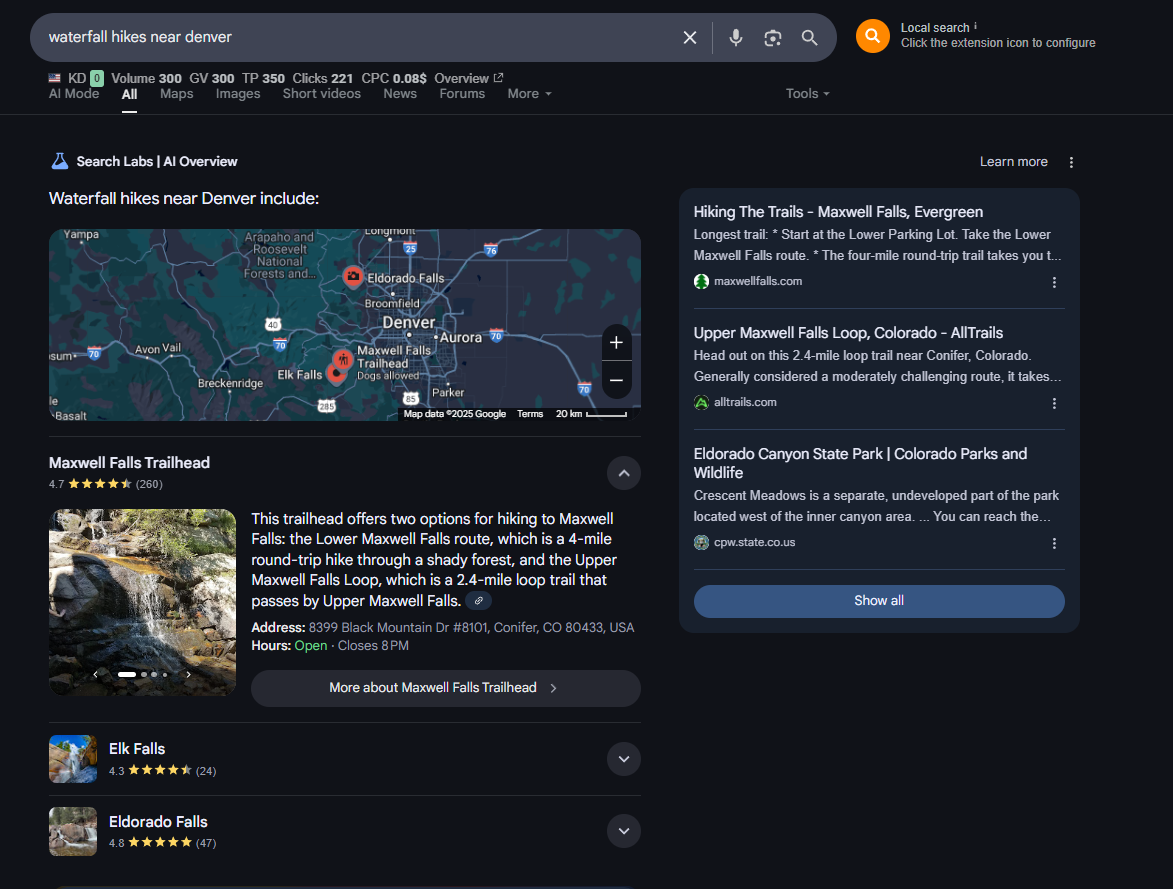

One of Google’s recent large rollouts was AI Overviews.

While AI Overviews have received mixed feedback, they bring LLM text generation directly into Google’s results.

Source: Growth Memo

Google used to primarily retrieve results from its index, but now its system also uses the index to generate summaries.

A popular use case for LLMs is RAG (retrieval-augmented generation): a technique where an LLM retrieves relevant information from an external knowledge base and uses it to inform its output.

A common example is a customer support LLM referencing a company’s FAQs, return policy, and the customer’s purchased item to provide specific policy information or next steps.

This is a small-scale example using a company’s internal knowledge base plus recent orders.
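Here's a minimal sketch of the retrieval and augmentation halves of RAG for this customer-support example. The knowledge base is hypothetical, retrieval is simple word overlap rather than the vector search many production systems use, and the final generation step (sending the prompt to an LLM) is left out because it depends on whichever model API you use.

```python
import re

# Hypothetical internal knowledge base for a support bot (policy, FAQ, order data).
KNOWLEDGE_BASE = [
    "Return policy: items can be returned within 30 days of delivery for a full refund.",
    "Shipping FAQ: standard shipping takes 3 to 5 business days.",
    "Order 1042: blue running shoes, delivered on March 3.",
]


def words(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, k=2):
    """Retrieval step: rank documents by word overlap with the question, keep the top k."""
    q = words(question)
    # sorted() is stable, so ties keep their original knowledge-base order.
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, docs):
    """Augmentation step: paste the retrieved text into the prompt the LLM will see."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("Can I return order 1042?", KNOWLEDGE_BASE))
```

For this question, the return policy and the order record each share two words with the question, so they are retrieved, while the shipping FAQ is left out of the prompt.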

Now, imagine Google’s entire index of billions and billions of pages acting as a retrieval database for your input.

So while Google has always been the standard for retrieving results relevant to your query, they can now combine the process using their AI overviews to:

- Retrieve documents from their index that are relevant to your search

- Have Gemini (their flagship LLM) use those retrieved documents to generate a summary for the user

Google has been an AI pioneer going back to at least 2018, and the direction it’s heading in is clear as day.

Google has been, and still is, the leader in search, and with its crawling algorithms and index already in place, it can use that system to power its LLMs for a new type of search:

AI augmented search.

Just look at the recent AI Mode feature, which removes the traditional SERP entirely and creates an LLM-style experience very similar to Gemini’s interface.

This system lets Google blend its traditional retrieval systems with generation, using its index for RAG to generate summaries for a user.

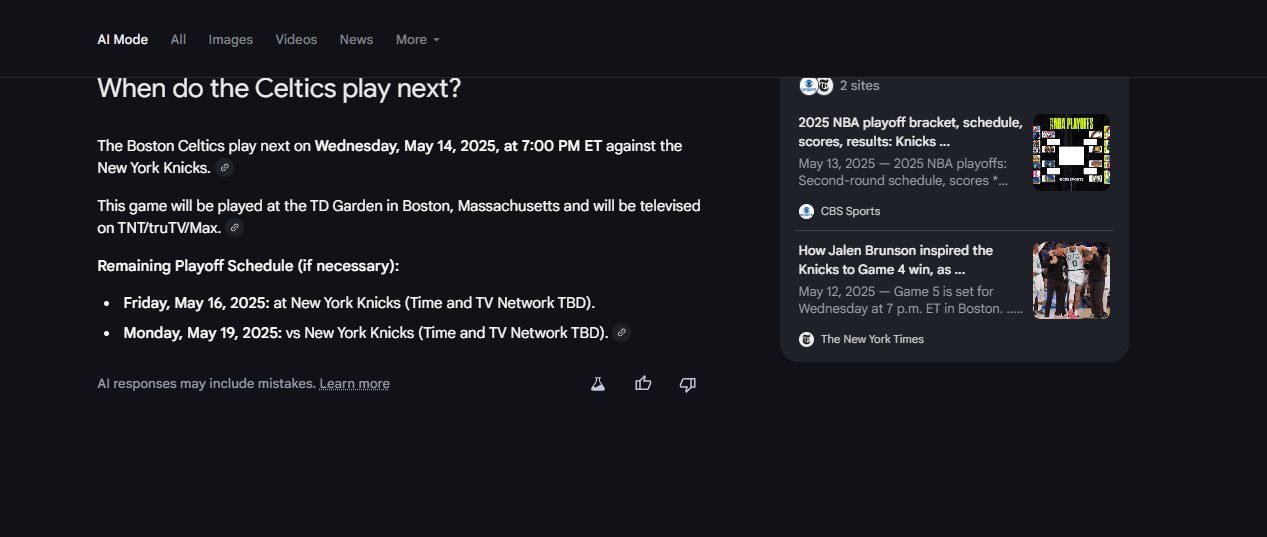

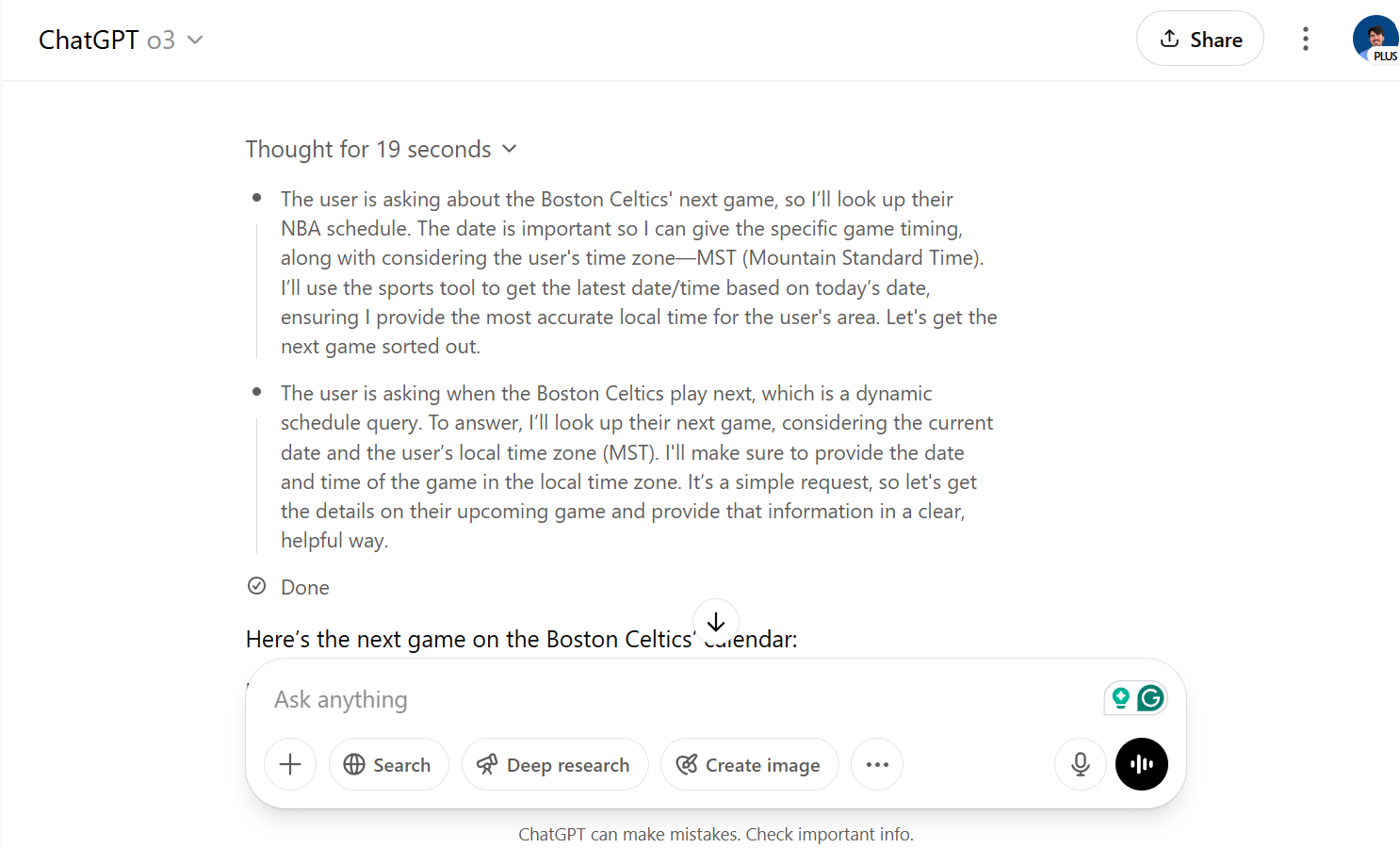

If I search “when do the Celtics play next?”, the system will break down my search (like it normally does) and then retrieve relevant documents from its index to then generate an output.

Since one of Google’s main strengths has always been fresh, up-to-date results, this retrieval system pairs extremely well with RAG to generate real-time outputs.

Google’s AI Mode is still in the testing lab, so it hasn’t rolled out publicly yet, but it’ll be interesting to see how it’s fully implemented (especially with ads, since 77% of Google’s revenue comes from ads).

But either way, Google is in a prime position to blend their traditional search model with an LLM interface.

While Google is a search engine merging with an LLM, there are also LLMs that are now turning into search engines.

Since LLMs are pre-trained on a fixed snapshot of data, keeping them current through training alone would mean constantly retraining or fine-tuning the model.

It’s a reason why the majority of LLMs now have a search feature.

Using RAG, LLMs can now query search APIs to pull current information from search engines’ indexes and ranking algorithms.

A common example of this is ChatGPT’s web search feature, which commonly uses Bing’s search API to retrieve information.

While you traditionally had to turn on a “search” feature manually to search the web, most LLM providers are introducing reasoning models and agents that can decide to search without you telling them to.

And this is likely where LLMs and search engines are heading:

An AI agent (whether OpenAI, Google, Anthropic) that can generate like a typical LLM while also retrieving relevant/current results like a search engine.

We are basically seeing both sides of the coin merging into one destination.

A real-time search chatbot.

What This All Means

While there was a lot to cover here, we can sum it all up like this:

- LLMs and Google might share quite a few similarities in the near future

- Google is looking to overhaul its search model in favor of AI search

- LLMs will likely improve their search features, leading to these LLMs chipping away at Google’s market share

With all of these hefty changes happening to Google Search and LLM usage, it raises the question...

Is SEO at Risk Because of GEO?

There’s a lot of panic around SEO being taken over entirely by GEO, but that panic is misguided.

GEO isn’t a threat, it’s an opportunity.

I’ll explain why.

Let’s be real: when we’re talking about SEO, we’re usually talking about ranking on Google specifically.

But the thing people fail to realize is that Google is a monopoly.

In 2024, a US judge labeled Google as a monopolist in its search engine business.

In 2025, another US judge found Google to be a monopoly in its advertising business.

So why is that important?

Monopolies will always be anti-consumer.

But when barriers to entry are low and there’s a healthy mix of competitors, it’s usually consumers (or, in this case, businesses) who benefit most.

How? They’re not bound by a single search platform anymore.

Traditionally, with Google, all you had were the organic blue links and the paid ads. That’s it.

If you didn’t rank in those blue links, then you weren’t getting any visibility.

Even if you rank in position #6, you’re barely getting any clicks, let alone business, compared to the top 5.

Almost 30% of clicks go to the #1 result.

Not to mention the biggest hurdle, which was having users sort through multiple sites to find the answers they’re looking for.

Now with GEO, there are way more options for visibility.

Even better, that visibility is less driven by bias (even though some bias remains).

When the user receives that output, they’re not sorting through sites to get it.

It’s coming directly from an LLM that generates that output based on the sources it has available.

So the user receiving that result will be much more likely to consider the options being displayed.

Not only does GEO provide an awesome benefit in this way, but the content impacting LLM results is likely still being found through search, too.

Whether or not Google is forced to sell Chrome, more search engines mean more opportunities.

We’re no longer bound to a single algorithm, and we now have WAY more ways to get brands visibility, through:

- YouTube videos and Reddit threads featured on the SERPs

- Perplexity, ChatGPT, and Claude

- AI overviews

- The blue links, still

So, while there is a lot of change happening at the moment, it’s actually a great time to be a marketer right now.

SEO is not going anywhere, and even if it did, there is still plenty of room for opportunity.

Tactics That Actually Get You Featured in LLMs

With all that said, let’s talk about the tactics that I’ve seen work for getting you featured in LLMs.

Just like SEO, there are no guarantees that you will be featured in an LLM.

We’re just increasing the likelihood of you being included by feeding the machine.

Some LLMs like Claude and Llama (Meta’s LLM) rely primarily on training data for their results, which means newer content you create won’t have much of an impact.

However, this is changing for Claude, which has since added web search to its model.

Since more and more of these tools are adding search features as a core part of their models, each one will rely heavily on a search engine’s index.

ChatGPT, for example, relies almost entirely on Bing's index, with 87% of its citations matching Bing's top results. Gemini, of course, has Google’s massive index to work from.

So both ChatGPT and AI Overviews rely very heavily on what’s currently ranking on the first page.
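If you want to check this kind of overlap for your own queries, a small script can compare the URLs an AI answer cites against the top search results you recorded for the same query. This is a hypothetical audit helper, not how the 87% figure was measured; you'd collect both URL lists yourself (manually or with whatever tracking tool you use).

```python
from urllib.parse import urlparse


def normalize(url):
    """Reduce a URL to host + path so http/https, 'www.' and trailing slashes don't matter."""
    parsed = urlparse(url if "://" in url else "https://" + url)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parsed.path.rstrip("/")


def citation_overlap(cited_urls, top_results, n=10):
    """Share of an AI answer's cited URLs that also appear in the top-n search results."""
    top = {normalize(u) for u in top_results[:n]}
    cited = [normalize(u) for u in cited_urls]
    if not cited:
        return 0.0
    return sum(1 for u in cited if u in top) / len(cited)


# Hypothetical data for a single query.
cited = ["https://www.example.com/guide/", "https://other.org/post"]
top = ["http://example.com/guide", "https://third.net/"]
print(citation_overlap(cited, top))  # 0.5
```

Tracked across many queries, a high overlap suggests that ranking on the first page is also what earns citations for your topics.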

But still, the way users engage with LLMs is significantly different from a typical Google search.

For Google, the user typically has to condense their thoughts into a long-tail search that may or may not be specific.

With LLMs, the user will not only provide more context to their prompt, but LLMs are now starting to save your entire chat history to provide more personalized responses.

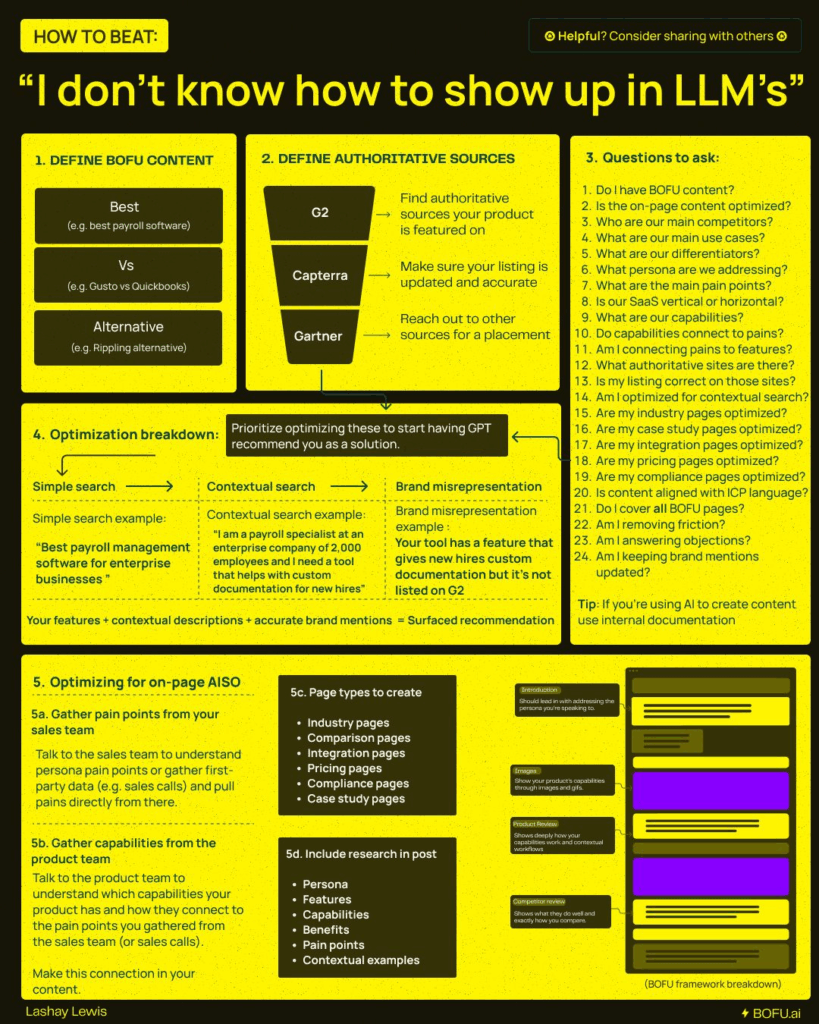

Because of this, you’ll really have to nail down your ICP (ideal customer profile) and put together:

- Who they are (role, industry, title, etc.)

- What problems they currently face

- How they may seek out solutions to those problems

Once you have that down, you can help LLMs connect the dots between your product and your brand through content.

This comes either from on-site content that defines:

- Brand Information: Your pricing info, brands that use your product, and common integrations

- Product Information: Specific features, what industries benefit most from the product, specific use cases of the product, and what problems the product helps solve

Or from off-site content (which basically boils down to PR) that shapes:

- Positive and negative sentiment about your brand

- Brand mentions and the context that goes along with it

One heavy disclaimer, though: this is still 95% SEO.

Just a slightly different approach.

1: Brand-Related Content

If you want to see success with GEO, the first step is to focus on branded content.

The idea behind this is to create comprehensive content that clearly explains who you are as a brand.

You need to answer the questions people are already asking:

- Is your product worth the cost?

- What does your brand stand for?

- Are there any issues with your brand?

- How does your pricing work?

This content becomes crucial when users are in the initial brand research phase.

But I don’t just mean creating a generic “about us” page.

You need to craft pages that provide a comprehensive look at your brand, which means having:

- A pricing page that clearly defines your pricing

- Case studies that show results you’ve delivered for other companies

- Common integrations that prospects ask you about

- FAQs that address common problems your audience faces
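One way to make FAQ content easier for machines to parse is schema.org `FAQPage` structured data. This article doesn't prescribe it, and there's no guarantee any LLM uses the markup, so treat it as an optional extra on top of well-written visible FAQs. The questions and answers below are hypothetical.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical FAQ content for an imaginary product.
FAQS = [
    ("How does your pricing work?", "Plans are billed per seat, monthly or annually."),
    ("Does it integrate with Slack?", "Yes, through a native Slack integration."),
]

markup = json.dumps(faq_jsonld(FAQS), indent=2)
# Place the output on the FAQ page inside <script type="application/ld+json"> ... </script>
print(markup)
```

The answers in the markup should match the answers visible on the page, so the structured data and the content tell the same story.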

The goal of these resources is to make sure an LLM draws on your own content when a user is researching your brand.

These users aren’t doing a simple search, and with the addition of deep research, LLMs have way more resources to pull from.

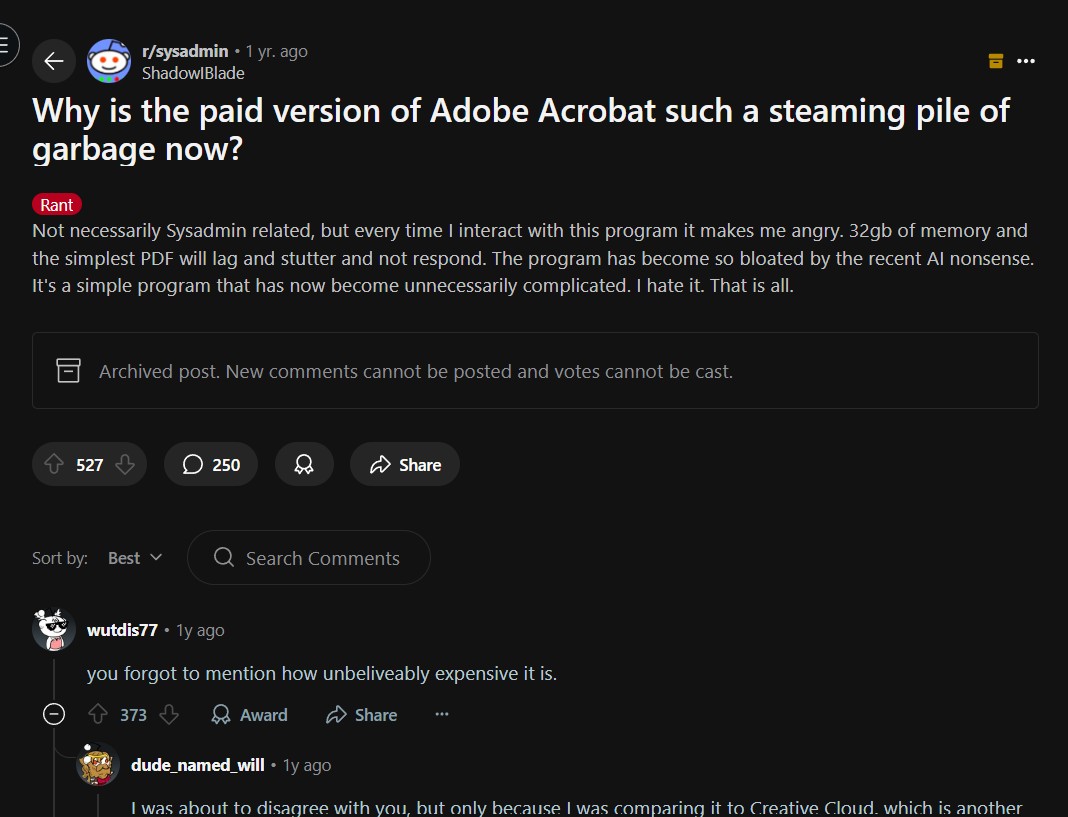

Instead of having an LLM pull from a random resource like G2 or Capterra, you want to control the narrative and make it crystal clear you’re answering the questions that are tied specifically to your brand.

Honesty matters here: you’re not going to get away with hiding flaws from LLMs.

They aggregate multiple sources, so you have to address criticisms and common questions head-on.

When users ask ChatGPT, "Is [Your Brand] worth it?" your content needs to directly shape and influence that answer.

Otherwise u/CptButtChugger_420_69 from Reddit is going to answer it, and you may not like that answer as much.

2: Original Data & Statistics

LLMs absolutely love statistics, especially current data.

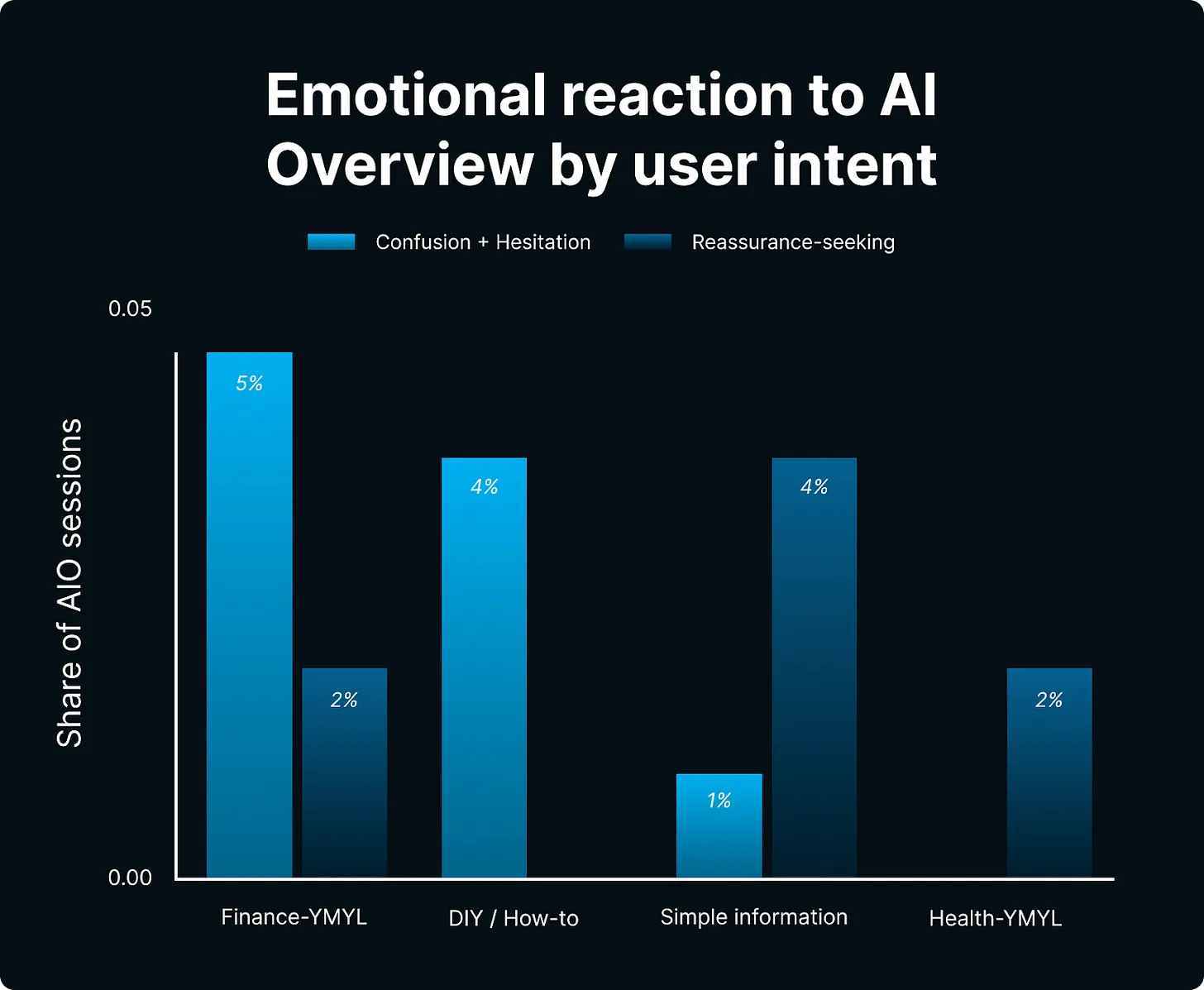

In fact, in a study of over 10K queries, quotes and statistics were shown to drive the most visibility in LLM platforms.

When you publish original research, you get dual benefits:

- LLMs cite your data in their responses

- Those citations are formatted so users can plug them directly into their content (giving you a link)

This creates visibility AND builds backlinks simultaneously.

It's one of the easiest wins available.

But not all data is created equal.

Data from surveys, case studies, and proprietary research is referenced most often.

So, create annual industry reports that become go-to references.

For example, if you're in marketing:

- Run a survey of 1,000+ marketers about current tactics

- Package your findings into a digestible report with clear, quotable statistics

- Update your data consistently to maintain relevance (older stats get deprioritized by LLMs in favor of recent findings)

- Include unexpected insights, surprising statistics, and new perspectives that challenge conventional wisdom

- Make your methodology transparent so LLMs recognize you as a credible source

- Add timestamps to all research to signal freshness to both search engines and LLMs
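For the timestamp and methodology points, schema.org `Dataset` markup lets you state the publish date, last update, and measurement method explicitly. This is a sketch assuming you publish the research as an HTML page; the report details below are hypothetical, and whether a given LLM reads this markup is not something this article establishes.

```python
import json
from datetime import date


def dataset_jsonld(name, description, published, modified, publisher, method):
    """schema.org Dataset markup carrying explicit publish/update dates and methodology."""
    return {
        "@context": "https://schema.org",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        "creator": {"@type": "Organization", "name": publisher},
        "measurementTechnique": method,
    }


# Hypothetical annual survey report.
report = dataset_jsonld(
    name="State of Marketing Survey 2025",
    description="Survey of marketers on current tactics and AI spending.",
    published=date(2025, 1, 15),
    modified=date(2025, 6, 1),
    publisher="Example Co.",
    method="Online survey",
)
print(json.dumps(report, indent=2))
```

When you refresh the data, update `dateModified` along with the visible "last updated" date on the page.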

And when sharing data, remember that specificity wins:

- "73.4% of marketers increased AI spending in 2025" beats "Most marketers use AI"

- "Average ROI increased by 142% over 6 months" beats "Results improved significantly"

Once you’re done, publish your data in multiple formats, but don’t gate all of them.

You want some visibility so search engines and LLMs can still draw on them.

And ungated blog content in HTML is the best way to go about this.

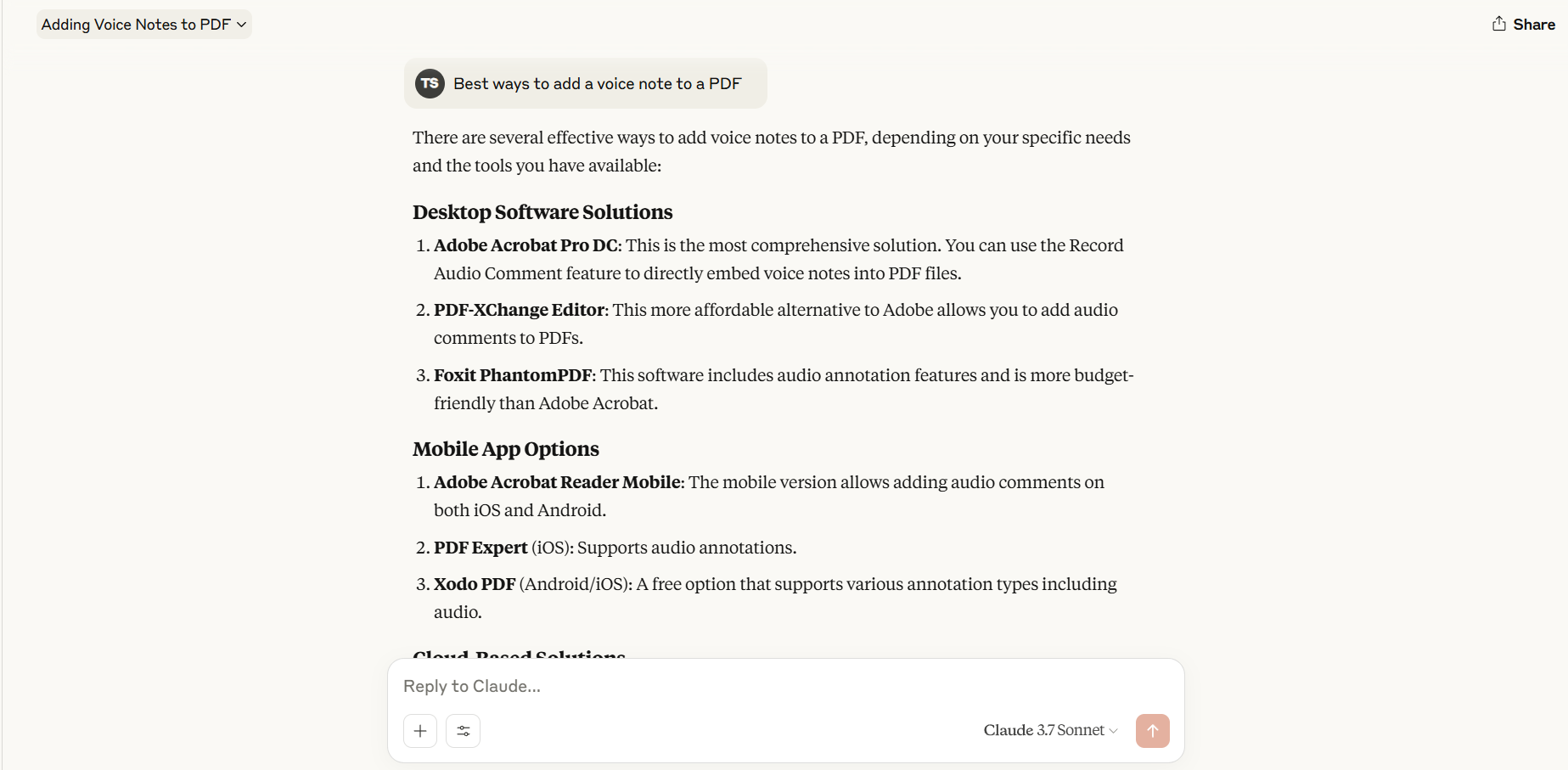

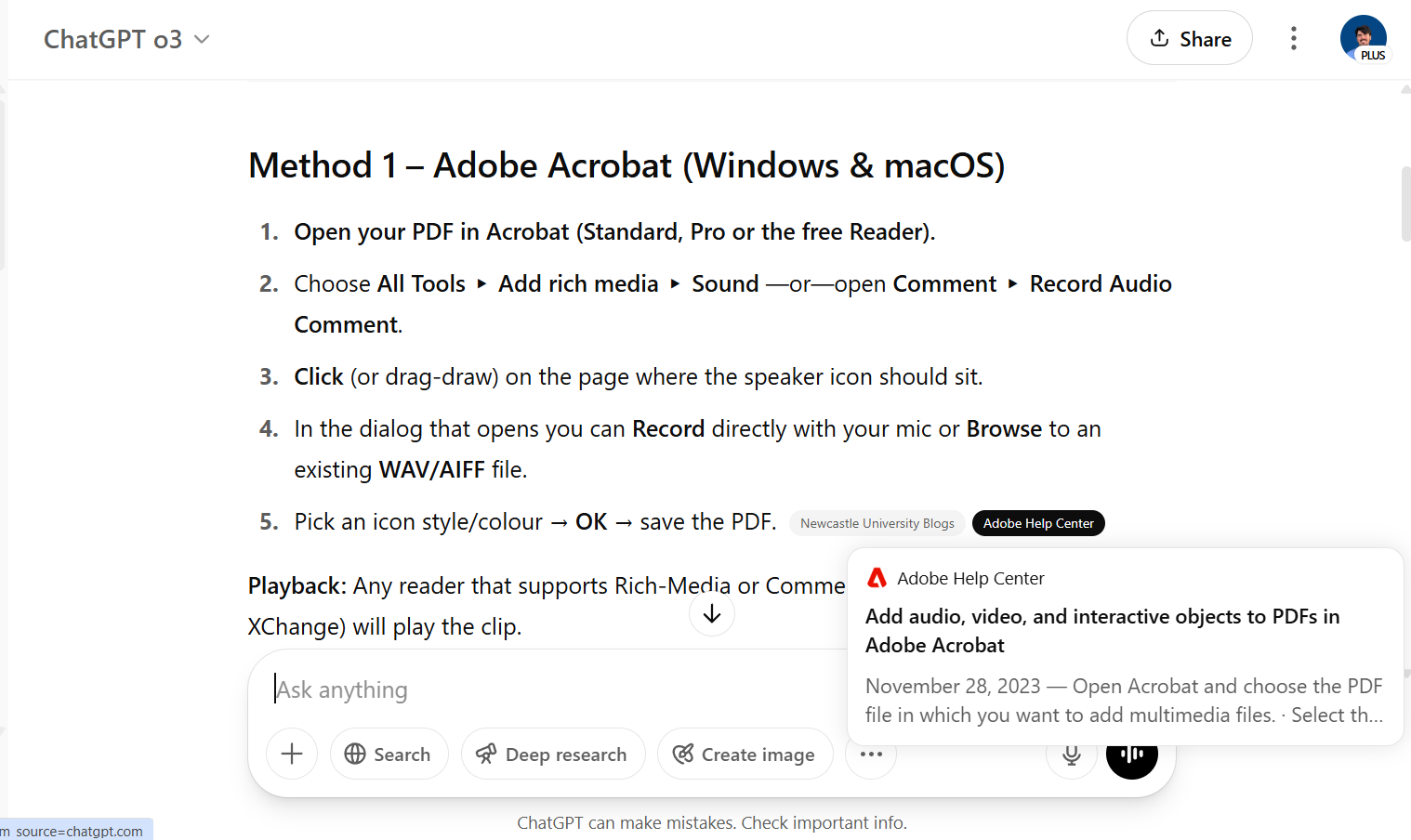

3: Product-Specific Content

After covering branded and data-focused content, it’s time to focus on creating content specifically about your product.

Our goal here is to develop detailed, actionable “how-to” content that walks users through your product’s potential use cases.

For example: "How to Add Voice Notes to a PDF Using [Your Product]"

When someone asks an LLM how to add voice notes to PDFs, it can clearly see that the request matches your content’s intent.

Then, the LLM can source your product guide in its solution for the searcher.

The LLM will reference you as a viable option and even provide the how-to steps directly from your article.

Most sites do this in the form of product documentation, but you’ll need to go the extra mile with these pages.

LLMs also tend to pull from knowledge bases rather than just scanning a main site when answering how-to questions.

This creates a double win: your product gets mentioned AND your exact process gets shared.

But again, this is very much SEO with an added bonus.

When you create product-led content, just keep in mind that you should:

- Make these guides incredibly specific and action-oriented.

- Break processes down into clear, numbered steps with screenshots.

- Cover every possible use case for your product, no matter how niche.

- Create separate guides for different user levels (beginner, intermediate, expert).

Think of each “how-to” as a mini-demo of your product.

This isn’t typical product-led content where you position your tool as one of the options.

It should be an entire walkthrough of that specific feature of your product.

The more specific you are about how your product works, the more likely an LLM will reference it in its output.

4: Niche Category Positioning

But wait: there’s more.

So far, we’ve covered:

- Branding-related questions

- Product-related questions

- The importance of original research

Now, we’ll get into specific positioning for your brand and product.

The goal of category positioning is to make it extremely clear to LLMs:

- Who your product serves

- How it differs from alternatives in your space (both in features and positioning)

We’re positioning your product for your ICP, who will give an LLM way more context in their chat than just “best project management software.”

You want to stay away from going broad anyway since you’ll be competing with industry giants like Asana, ClickUp, and Monday.com.

You’re much better off getting specific with your positioning, like "Best Project Management Software for Consultants."

The more specific your category positioning and the more you label yourself as the best option for this industry, the higher your chance of appearing in AI responses.

Especially if other competitors in your space also start including you in their own “best project management tool” articles and label you as the best option for consultants.

Do the same with competitor-alternative content, too.

Create content positioning you as the best ClickUp alternative, but specifically for consultants.

And while this may mostly apply to bottom-of-funnel content, it can apply to topic coverage too (wink topic authority wink).

Become the definitive resource for that specific niche.

Interview your ICP so you can learn what they care about, their pain points, and what topics to target.

Then, create content tailored to your niche and audience.

This includes product-specific how-to content (how consultants can use [your brand] to do xyz).

But you also need to cover typical non-branded, job-to-be-done content like “how consultants can improve their workflow efficiency.”

Remember: being specific wins with LLMs (and SEO, too, but no one likes to admit that).

Don’t forget industry pages, too. You want LLMs to know who your product benefits most.

Don’t cover every industry out there; just hyper-focus on your main horizontal audience (B2B SaaS) and the verticals that fall underneath it (e.g., martech, legaltech).

There’s a lot you can do here, but this can be summarized by:

- Create BoFu content like competitor alternatives, category content, and competitor comparisons

- Build out feature/use case pages that give a high-level view of what your product can do

- Create industry pages highlighting which niches benefit most from your product

- Build out specific how-to content around your niche's pain points

DOES ANY OF THIS SOUND FAMILIAR?

5: Authority Signals Work Slightly Differently

With SEO, backlinks remain a dominant ranking factor.

With GEO, brand mentions carry more weight, with or without links attached.

You don't need a hyperlink to gain "page rank" in an LLM.

If multiple sources mention you as "the best PDF editor for Mac," LLMs start to recognize this pattern.

The more third-party content referencing you within a specific category, the higher your chances of being featured.
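LLMs don't expose how they weigh mentions, but you can roughly audit your own footprint. This hypothetical helper counts how many third-party pages mention a brand at all, and how many mention it in the same sentence as a category phrase like "PDF editor for Mac". The page texts are made up, and the naive sentence split on ., ! and ? is an assumption that will miss some edge cases.

```python
import re


def mention_report(pages, brand, category):
    """Count pages mentioning a brand, and how many mention it in the same sentence as a category phrase."""
    brand_re = re.compile(re.escape(brand), re.IGNORECASE)
    cat_re = re.compile(re.escape(category), re.IGNORECASE)
    mentioned = in_context = 0
    for text in pages:
        # Naive sentence split: break after ., ! or ? followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", text)
        if any(brand_re.search(s) for s in sentences):
            mentioned += 1
            if any(brand_re.search(s) and cat_re.search(s) for s in sentences):
                in_context += 1
    return {"pages_mentioning": mentioned, "mentions_in_category_context": in_context}


# Hypothetical third-party page texts.
PAGES = [
    "Our pick for the best PDF editor for Mac is ExamplePDF. It is fast.",
    "ExamplePDF raised prices this year. Many users want a PDF editor for Mac.",
    "Top note-taking apps reviewed.",
]
print(mention_report(PAGES, "ExamplePDF", "PDF editor for Mac"))
```

Only the first page ties the brand to the category in one sentence; the second mentions both, but separately, which is a weaker signal of the pattern described above.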

We even saw this in a recent case study published by George Chasiotis of Minittia.

They were able to get one of their clients, InboxArmy (an email marketing agency), featured as one of the top results in Perplexity, AI Overviews, and ChatGPT for multiple searches like “best email marketing agencies in 2025.”

All they did was:

- Published “best email marketing listicles” across dozens of relevant publications

- Got quotes from people within the company featured in well-known publications

By doing this, they gave LLMs more context around this brand that didn’t rely on their website.

Outside of publishing the listicles, a majority of this just boils down to good PR.

So:

- Focus on generating quality mentions across diverse sources.

- Get featured in industry publications, even if they don't provide links.

- Participate in podcast interviews where your expertise gets verbally referenced.

- Be active in Reddit threads where your brand is being discussed.

- Get your customers to leave testimonials on different review platforms.

Stay consistent with your messaging across all platforms.

And above all, authenticity matters more than volume: sophisticated LLMs are likely to filter out artificial mentions.

So, What’s Actually the Difference Between Optimizing for LLMs and Search Engines?

It really just comes down to approach.

The optimizations are practically the same.

It’s just the strategy that’s different.

With SEO, you primarily focus on non-branded searches.

So that means:

- Predicting problems your audience has

- Creating content addressing those problems

- Optimizing for those search terms

Here's a quick LinkedIn video I made talking about exactly this.

But most importantly, the user still has to click site-to-site to find information.

There are plenty of branded searches on Google, too (in fact, 44% of all Google searches are branded), but as we’ve mentioned, branded content directly influences what LLMs say.

So with GEO, the strategy shifts more toward brand and product marketing.

Meaning:

- The user has more room to add context to their search and give more specifics about what they’re looking for

- The user is much less likely to click on a site, and is instead influenced by whether your brand is mentioned in a relevant buying context

- You need to make sure LLMs have plenty of context around your brand and product features

- You need to focus on PR around your brand

I can’t stress enough how important it is to recognize that GEO is forcing SEOs to break out of their silos and become full-fledged marketers.

SEO will still handle non-branded search traffic, but GEO opens the playing field to a much more holistic strategy than just optimizing for rankings.

Is GEO Here to Stay? Where Search Is Headed Next

Generative Engine Optimization is absolutely here to stay.

But it won’t replace SEO entirely.

It will reshape user search behavior, with people using both Google and AI assistants in their buying journey.

Here's how I see the future breaking down:

- Google will remain stronger for: Transactional queries and expertise-driven content

- LLMs will excel at: Informational queries where quick summaries provide immediate value

Non-branded searches targeting middle and bottom-funnel prospects will continue to drive value through traditional SEO.

Meanwhile, GEO gives you a new opportunity to double down on brand content.

You now have two distinct channels requiring slightly different approaches.

The marketers who adapt quickest to this dual approach will gain a significant competitive advantage in the coming years.

This isn't about abandoning SEO.

It's about expanding your toolkit to capture attention wherever your audience is searching.
